Unifying Visual Understanding and Generation

Introducing VARGPT-v1.1

VARGPT-v1.1 builds on the original VARGPT framework. Like its predecessor, it keeps a dual-paradigm design inside a single autoregressive model: next-token prediction for visual understanding and next-scale prediction for image synthesis. The update improves both halves of that design through new training data, a stronger language backbone, and a revised training recipe.
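To make the two paradigms concrete, here is a minimal Python sketch of the two decoding loops. The function names and the toy predictors are hypothetical stand-ins, not VARGPT-v1.1's code. VAR-style models also upsample each coarser map before conditioning the next scale, a detail this sketch leaves out.

```python
from typing import Callable, List

Token = int
TokenMap = List[List[Token]]


def next_token_decode(
    prompt: List[Token],
    predict_token: Callable[[List[Token]], Token],
    n_new: int,
) -> List[Token]:
    """Next-token prediction: emit one token per step, each step
    conditioned on the prompt plus every token generated so far."""
    seq = list(prompt)
    for _ in range(n_new):
        seq.append(predict_token(seq))
    return seq[len(prompt):]


def next_scale_decode(
    scales: List[int],
    predict_map: Callable[[List[TokenMap], int], TokenMap],
) -> List[TokenMap]:
    """Next-scale prediction: emit a whole s x s token map per step,
    conditioned on every coarser map generated so far. All tokens of
    one scale come out of a single step."""
    maps: List[TokenMap] = []
    for s in scales:
        token_map = predict_map(maps, s)
        if len(token_map) != s or any(len(row) != s for row in token_map):
            raise ValueError(f"predictor returned the wrong shape for scale {s}")
        maps.append(token_map)
    return maps


# Hypothetical toy predictors; a real model would sample from learned
# distributions instead of returning fixed values.
text = next_token_decode([1, 2, 3], lambda seq: len(seq) % 7, n_new=4)
maps = next_scale_decode([1, 2, 4], lambda prev, s: [[len(prev)] * s for _ in range(s)])
print(text)                    # [3, 4, 5, 6]
print([len(m) for m in maps])  # [1, 2, 4]
```

The first loop needs one model call per token, while the second needs one call per scale, however many tokens that scale contains.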

Four key technical innovations distinguish VARGPT-v1.1:

A multi-stage training paradigm combining iterative visual instruction tuning with reinforcement learning through Direct Preference Optimization (DPO)

An expanded visual generation corpus of 8.3 million instruction pairs (6× larger than v1.0)

Enhanced visual comprehension through migration to the Qwen2-7B backbone

Architecture-agnostic fine-tuning enabling visual editing capabilities without structural modifications
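Direct Preference Optimization, named in the first item, trains the model to favor a preferred output over a rejected one, measured relative to a frozen reference model. The sketch below computes the standard DPO objective from per-sequence log-probabilities. The default β = 0.1 and the idea of passing summed token log-likelihoods are assumptions; this post does not say how VARGPT-v1.1 builds its preference pairs or sets β.

```python
import math
from typing import Sequence, Tuple


def softplus(x: float) -> float:
    """Numerically stable log(1 + exp(x))."""
    if x > 0:
        return x + math.log1p(math.exp(-x))
    return math.log1p(math.exp(x))


def dpo_loss(
    policy_chosen_logp: float,
    policy_rejected_logp: float,
    ref_chosen_logp: float,
    ref_rejected_logp: float,
    beta: float = 0.1,  # assumed value, not taken from VARGPT-v1.1
) -> float:
    """DPO loss for one preference pair: -log sigmoid(beta * margin).
    The margin compares how far the policy, relative to the reference
    model, raised the log-probability of the preferred output versus
    the rejected one."""
    chosen_shift = policy_chosen_logp - ref_chosen_logp
    rejected_shift = policy_rejected_logp - ref_rejected_logp
    logits = beta * (chosen_shift - rejected_shift)
    # -log(sigmoid(z)) == log(1 + exp(-z)) == softplus(-z)
    return softplus(-logits)


def mean_dpo_loss(
    pairs: Sequence[Tuple[float, float, float, float]], beta: float = 0.1
) -> float:
    """Average DPO loss over (policy_chosen, policy_rejected,
    ref_chosen, ref_rejected) log-probability tuples."""
    return sum(dpo_loss(*p, beta=beta) for p in pairs) / len(pairs)


print(round(dpo_loss(-1.0, -1.0, -1.0, -1.0), 4))  # 0.6931, i.e. log 2
print(dpo_loss(-1.0, -5.0, -2.0, -2.0))  # below log 2: policy already prefers the chosen output
```

When the policy and reference agree, the loss sits at log 2. Gradient descent lowers it by pushing probability toward chosen outputs and away from rejected ones, without training a separate reward model.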

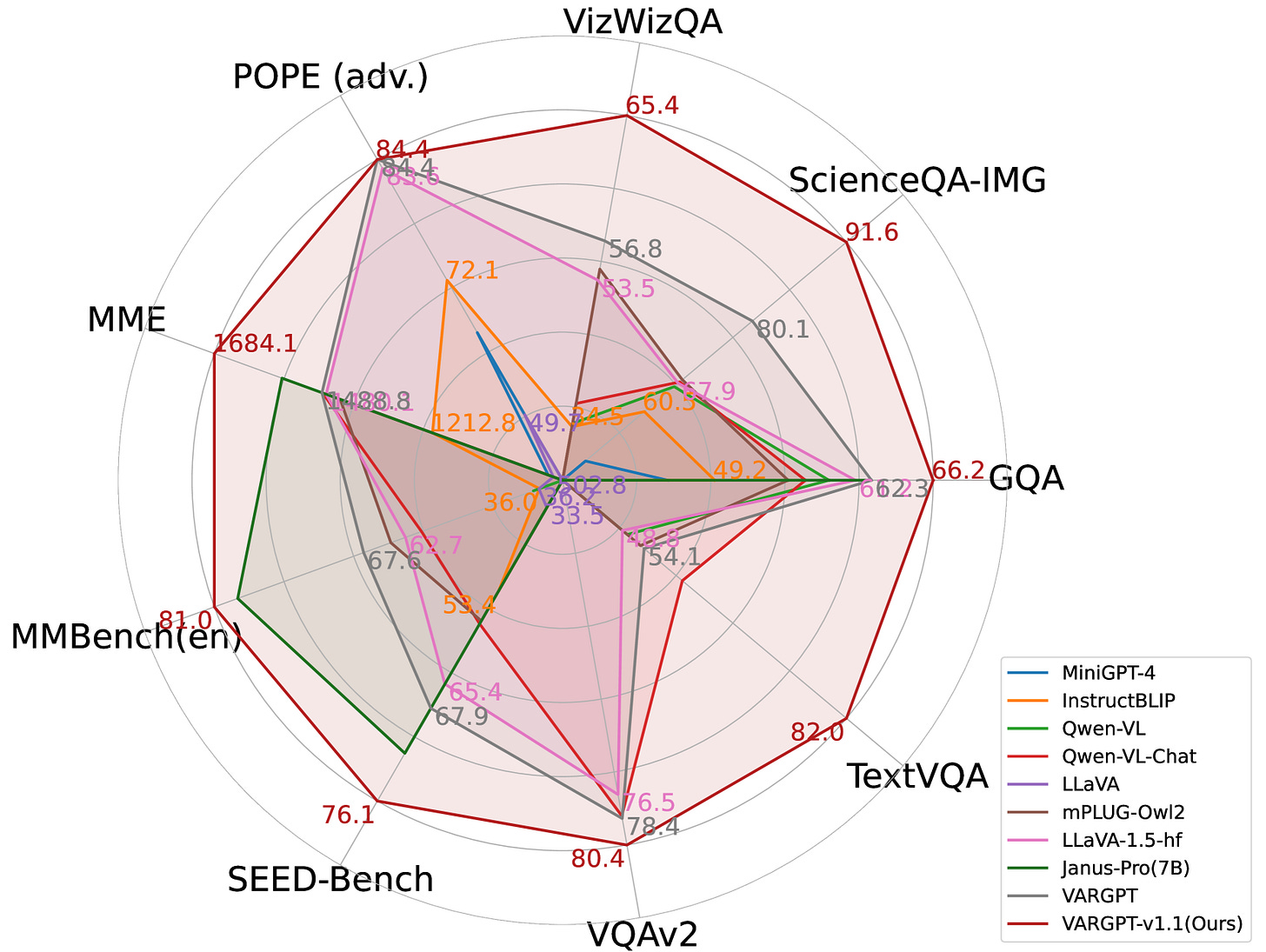

Comparison of VARGPT-v1.1 with baseline models on visual comprehension benchmarks. VARGPT-v1.1 scores higher than every compared baseline on each metric shown.

These innovations have enabled VARGPT-v1.1 to achieve state-of-the-art performance in both visual understanding and generation tasks, while demonstrating emergent capabilities in image editing without requiring architectural modifications.

The Landscape of Multimodal AI: Where VARGPT-v1.1 Fits In

Multimodal AI has advanced rapidly in both comprehension and generation. Multimodal Large Language Models (MLLMs) excel at cross-modal understanding, while denoising diffusion models dominate visual generation through iterative refinement.

Three primary paradigms have emerged in the pursuit of unified frameworks:

Assembly Systems - integrating LLMs with diffusion models

Pure Autoregression - architectures predicting visual tokens

Dual-diffusion Models - with parallel generation mechanisms

Comparison of different model architectures for visual tasks. VARGPT-v1.1 follows a purely autoregressive multimodal approach, using next-token prediction for comprehension and next-scale prediction for generation.
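The practical difference between AR and VAR decoding shows up in the number of sequential model calls. The arithmetic below compares generating a 16 × 16 grid of image tokens in raster order with generating it coarse to fine. The scale schedule is a hypothetical example, not VARGPT-v1.1's actual configuration.

```python
from typing import Sequence, Tuple


def raster_ar_cost(side: int) -> Tuple[int, int]:
    """Next-token (raster-order) generation of a side x side token grid:
    one sequential step per token. Returns (steps, total tokens)."""
    n = side * side
    return n, n


def next_scale_cost(scales: Sequence[int]) -> Tuple[int, int]:
    """Next-scale generation: one sequential step per scale, each step
    emitting s * s tokens in parallel. Returns (steps, total tokens)."""
    if any(b <= a for a, b in zip(scales, scales[1:])):
        raise ValueError("scales must be strictly increasing")
    return len(scales), sum(s * s for s in scales)


# Hypothetical scale schedule ending at a 16 x 16 token grid.
schedule = (1, 2, 3, 4, 5, 6, 8, 10, 13, 16)
print(raster_ar_cost(16))         # (256, 256)
print(next_scale_cost(schedule))  # (10, 680)
```

Under these assumptions, next-scale prediction needs 10 sequential steps instead of 256. The price is more tokens in total (680 vs 256), because every coarser map is generated along the way.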

Current unified models still struggle with conflicts between the representations that understanding and generation each require. TokenFlow, for example, unifies tokenization, yet its visual generation and understanding pipelines remain largely decoupled.

![Comparison of different model architectures, where 'AR' denotes autoregressive and 'VAR' denotes visual autoregressive. We present a comparative analysis of architectures designed for comprehension-only tasks, generation-only tasks, and unified comprehension and generation, alongside our proposed VARGPT-v1.1 and VARGPT [14] models. VARGPT-v1.1 and VARGPT are conceptualized as purely autoregressive multimodal models, achieving visual comprehension through next-token prediction and visual generation through next-scale prediction.](https://substackcdn.com/image/fetch/$s_!s4RM!,w_1456,c_limit,f_auto,q_auto:good,fl_progressive:steep/https%3A%2F%2Fsubstack-post-media.s3.amazonaws.com%2Fpublic%2Fimages%2Fca4c7d97-c2b5-49a2-9ee6-b51b6290f41e_1661x401.png)