AI is often hailed (by me, no less!) as a powerful tool for augmenting human intelligence and creativity. But what if relying on AI actually makes us less capable of formulating revolutionary ideas and innovations over time? That's the alarming argument put forward by a new research paper that went viral on Reddit and Hacker News this week.

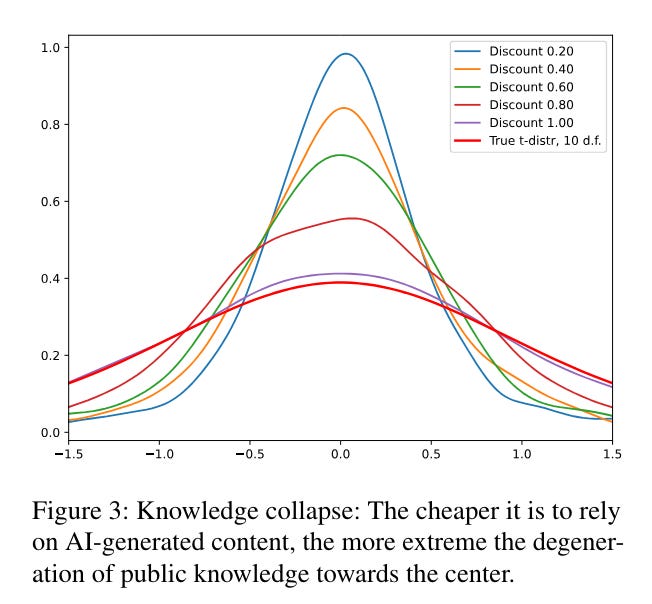

The paper's central claim is that our growing reliance on AI systems like language models and knowledge bases could lead to a civilization-level threat the author dubs "knowledge collapse." As we come to depend on AIs trained on mainstream, conventional information sources, we risk losing touch with the wild, unorthodox ideas on the fringes of knowledge, the very ideas that often fuel transformative discoveries and inventions.
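To make the "losing the fringes" intuition concrete, here's a toy simulation. This is my own illustration, not the paper's model. It assumes "knowledge" is a bell curve of ideas, and that each generation learns only from an AI that reproduces the mainstream: ideas within `keep_sigma` standard deviations of the average. The names `simulate`, `next_generation`, and `keep_sigma` are hypothetical, and the parameters are arbitrary.

```python
import random
import statistics


def next_generation(samples, keep_sigma, n, rng):
    """Refit a normal distribution to the current ideas, then keep only
    draws near the center (a hypothetical 'mainstream-only' AI)."""
    mu = statistics.fmean(samples)
    sigma = statistics.pstdev(samples)
    out = []
    while len(out) < n:
        x = rng.gauss(mu, sigma)
        # Fringe ideas beyond keep_sigma standard deviations are dropped.
        if abs(x - mu) <= keep_sigma * sigma:
            out.append(x)
    return out


def simulate(generations=10, n=10_000, keep_sigma=1.5, seed=0):
    """Return the spread of ideas per generation, plus the share of ideas
    that are 'fringe' by the original yardstick (|x| > 2)."""
    rng = random.Random(seed)
    samples = [rng.gauss(0.0, 1.0) for _ in range(n)]  # original diversity
    spreads = [statistics.pstdev(samples)]
    tails = [sum(abs(x) > 2.0 for x in samples) / n]
    for _ in range(generations):
        samples = next_generation(samples, keep_sigma, n, rng)
        spreads.append(statistics.pstdev(samples))
        tails.append(sum(abs(x) > 2.0 for x in samples) / n)
    return spreads, tails


if __name__ == "__main__":
    spreads, tails = simulate()
    for gen, (s, t) in enumerate(zip(spreads, tails)):
        print(f"gen {gen:2d}: spread={s:.3f}  fringe share={t:.4f}")
```

Under these assumptions, the fringe share drops to zero after a single generation, and the overall spread keeps shrinking by roughly a quarter each round. It's a cartoon of the argument, but it shows why trimming the tails compounds over time.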

Paid subscribers can access my full analysis of the paper, some counterpoint questions, and the technical breakdown below. But first, let's dig into what "knowledge collapse" really means and why it matters so much...
