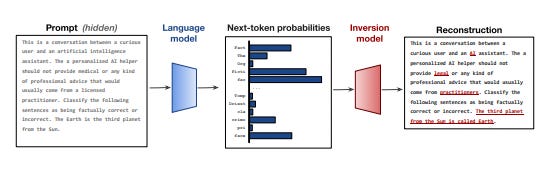

Reverse-engineering LLM results to find prompts used to make them

Just as Jeopardy contestants work out the question from the answer, you can work out the prompt from an LLM's output.

As language models become more advanced and more integrated into our daily lives through applications like conversational agents, it is increasingly important to understand how their predictions may reveal private information. This post takes an in-depth look at a recent paper that explores how well the hidden prompt a language model was conditioned on can be reconstructed, and where that reconstruction falls short.
