Meta found a way to secretly watermark deepfake audio

Researchers have found a way to imperceptibly watermark fake audio

The rapid advancement of AI voice synthesis has made it possible to create extremely realistic fake human speech. That realism also opens the door to voice cloning, deepfakes, and other forms of audio manipulation (the recent fake Biden robocall is the first example that comes to mind).

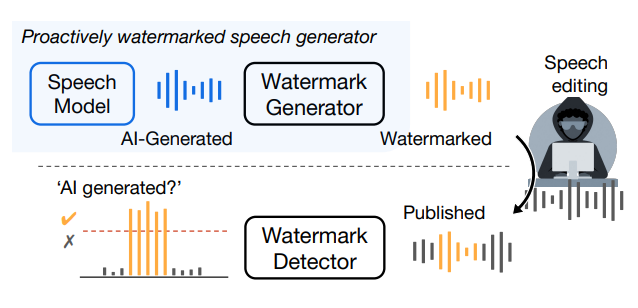

Robust new detection methods are needed to distinguish audio deepfakes from real recordings. In this post, we'll look at a novel technique from Facebook Research called AudioSeal (GitHub, paper) that tackles this problem by imperceptibly watermarking AI-generated speech. We'll see how it works, then cover some applications and limitations. Let's go!
