GPT-4 Doesn’t Know It’s Wrong: An Analysis of Iterative Prompting for Reasoning Problems

How real are these performance gains from self-reflection?

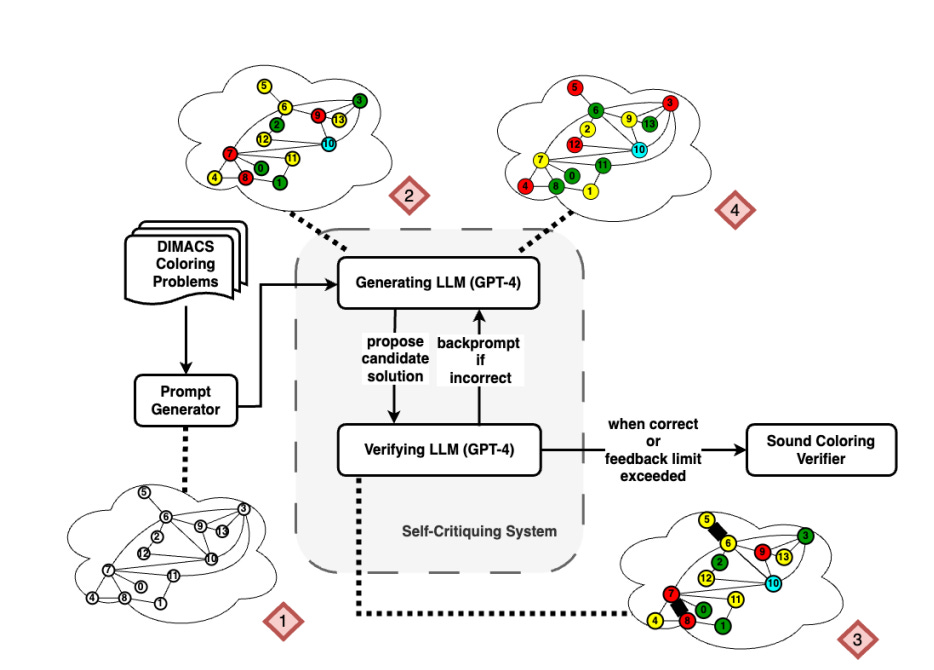

There has been tremendous enthusiasm around large language models (LLMs) like GPT-3 and GPT-4 and their potential to perform complex reasoning and language tasks. Some researchers have claimed these models exhibit an "emergent" capability for self-reflection and self-critique (as in approaches like SELF-RAG) that allows them to improve their reasoning performance over multiple iterations of prompting.

How real are these performance gains from self-reflection? A rigorous new study attempts to measure how effective iterative prompting actually is at boosting reasoning performance in LLMs.


The promise and hype around iterative prompting

The core premise behind using iterative prompting for reasoning tasks is that it should be easier for LLMs to verify solutions than to generate them from scratch. If that holds, having the model critique its own solutions iteratively should improve its reasoning performance over multiple rounds.
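To make the idea concrete, here is a minimal sketch of a generate-then-critique loop. It is an illustration under stated assumptions, not the setup used in the study: the function names (`iterative_prompting`, `toy_generate`, `toy_critique`) and the toy arithmetic problem are hypothetical, and the two toy functions stand in for what would be real model calls.

```python
from typing import Callable, Optional, Tuple


def iterative_prompting(
    generate: Callable[[str, Optional[str]], str],
    critique: Callable[[str, str], Tuple[bool, str]],
    problem: str,
    max_rounds: int = 5,
) -> Tuple[str, int]:
    """Run a generate-then-critique loop; return (last answer, rounds used)."""
    feedback: Optional[str] = None
    answer = ""
    for round_number in range(1, max_rounds + 1):
        # Ask for a solution, passing along the previous critique (if any).
        answer = generate(problem, feedback)
        # Ask the critic whether the solution is acceptable.
        accepted, feedback = critique(problem, answer)
        if accepted:
            return answer, round_number
    # The critic never accepted; return the last attempt.
    return answer, max_rounds


# Hypothetical stand-ins for model calls, used only to exercise the loop.
def toy_generate(problem: str, feedback: Optional[str]) -> str:
    # The first guess is wrong; the guess is revised once feedback arrives.
    return "5" if feedback is None else "4"


def toy_critique(problem: str, answer: str) -> Tuple[bool, str]:
    ok = answer == "4"
    return ok, ("" if ok else "The sum looks wrong; recheck the arithmetic.")


result = iterative_prompting(toy_generate, toy_critique, "What is 2 + 2?")
print(result)
```

Note that the loop stops as soon as the critic accepts an answer, so its output is only as good as the critic. In this sketch the critic is a correct checker by construction; when the same LLM plays both roles, whether its verification is actually reliable is exactly the assumption the premise above depends on.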
