Format your numbers like this to dramatically improve your LLM's math skills

Positional description matters for transformers arithmetic

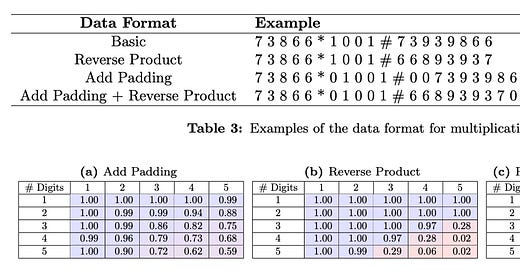

Arithmetic is an important yet challenging domain for LLMs. Despite their tremendous success with natural language, their arithmetic performance often falls short. In this blog post, we'll summarize a recent technical paper that takes a closer look at the capabilities and limitations of transformers in arithmetic. Key findings include a new insight into the role of positional encoding, along with techniques that improve models' multiplication and addition skills. Let's begin!
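The title's premise is that the way numbers are written out can change how well a transformer learns arithmetic. The minimal sketch below shows what "formatting your numbers" might look like in a training set. It is built on several assumptions for illustration and is not the paper's confirmed recipe: the helper names `format_example` and `parse_answer`, the default width of 15, and the exact layout are all hypothetical. The sketch zero-pads both operands to a fixed width so each digit lands at a predictable position. It also writes the answer's digits in reverse, so the ones digit comes first.

```python
def format_example(a: int, b: int, op: str = "*", width: int = 15) -> str:
    """Render one example as 'a<op>b=answer' (hypothetical format).

    Assumed layout: operands zero-padded to `width` digits, answer zero-padded
    to its maximum possible length and written least-significant digit first.
    """
    if a < 0 or b < 0:
        raise ValueError("this sketch only handles non-negative integers")
    if len(str(a)) > width or len(str(b)) > width:
        raise ValueError("operand wider than the padding width")
    if op == "*":
        result = a * b
        answer_width = 2 * width  # product of two w-digit numbers has at most 2w digits
    elif op == "+":
        result = a + b
        answer_width = width + 1  # sum of two w-digit numbers has at most w+1 digits
    else:
        raise ValueError(f"unsupported operator: {op!r}")
    lhs = f"{a:0{width}d}{op}{b:0{width}d}"
    # Reverse so the ones digit comes first, matching the direction carries propagate.
    rhs = f"{result:0{answer_width}d}"[::-1]
    return f"{lhs}={rhs}"


def parse_answer(example: str) -> int:
    """Recover the integer answer from a formatted example by un-reversing it."""
    rhs = example.split("=", 1)[1]
    return int(rhs[::-1])


if __name__ == "__main__":
    sample = format_example(12, 34, "*", width=4)
    print(sample)                # 0012*0034=80400000
    print(parse_answer(sample))  # 408
```

Fixed-width padding keeps digits of the same place value at the same offset in every example. Reversing the answer lets each generated digit depend on lower-order digits the model has already produced. Both changes are about presentation, and neither alters the underlying arithmetic.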

Subscribe to AIModels.fyi to keep reading this post and get 7 days of free access to the full post archives.