DeepMind: Robots can learn new skills from each other (just like people)

Just as people acquire new skills by observing others, robots also benefit from pooling knowledge

Robotics has made tremendous strides in recent years. However, most robots today remain specialized tools, focused on single tasks in structured environments. This makes them inflexible - unable to adapt to new situations or generalize their skills to different settings. To achieve more human-like intelligence, robots need to become better learners. Just as people can acquire new skills by observing others, robots may also benefit from pooling knowledge across platforms.

A new paper from researchers at Google DeepMind, UC Berkeley, Stanford, and other institutions demonstrates how multi-robot learning could enable more capable and generalizable robotic systems. Their paper introduces two key resources to facilitate research in this area:

The Open X-Embodiment dataset: A large-scale dataset containing over 1 million real-robot trajectories collected from 22 different robots performing a diverse range of manipulation skills.

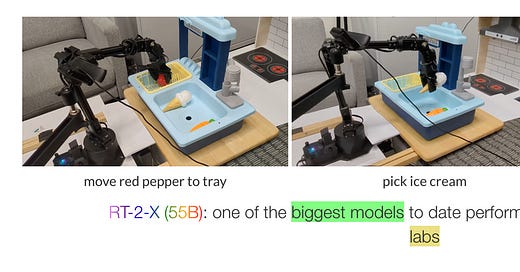

RT-X models: Transformer-based neural networks trained on this multi-robot data that exhibit positive transfer - performing better on novel tasks compared to models trained on individual robots.

The Promise of Multi-Robot Learning

The standard approach in robotics is to train models independently for each task and platform. This leads to fragmentation - models that do not share knowledge or benefit from each other's experience. It also limits generalization, as models overfit to narrow domains.

The Open X-Embodiment dataset provides a way to overcome this, by pooling data across research labs, robots, and skills. The diversity of data requires models to learn more broadly applicable representations. Further, by training a single model on data from different embodiments, knowledge can be transferred across robots through a shared feature space.
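To make the pooling idea concrete, here is a minimal sketch of how data from different robots might be merged into one training pool. It is an illustration under stated assumptions, not the paper's pipeline: the `Step` record, the `to_shared_action` and `pool_datasets` helpers, the robot names, and the choice of a 7-value shared action vector padded with zeros are all hypothetical simplifications.

```python
import random
from dataclasses import dataclass


@dataclass
class Step:
    robot: str        # which embodiment produced this step
    image: list       # stand-in for camera pixels
    instruction: str  # natural-language task description
    action: list      # action expressed in the shared format


def to_shared_action(raw_action, dim=7):
    """Pad or truncate a robot-specific action to a shared length.

    Assumption: a fixed-length vector is used as the common action
    format. Zero-padding is a simplification chosen for this sketch.
    """
    clipped = list(raw_action)[:dim]
    return clipped + [0.0] * (dim - len(clipped))


def pool_datasets(datasets):
    """Merge per-robot datasets into a single list of Step records."""
    pooled = []
    for robot, steps in datasets.items():
        for image, instruction, raw_action in steps:
            pooled.append(
                Step(robot, image, instruction, to_shared_action(raw_action))
            )
    return pooled


def sample_batch(pooled, batch_size, rng):
    """Draw a batch that can mix steps from several robots."""
    return rng.sample(pooled, batch_size)


# Two hypothetical robots with different native action sizes.
datasets = {
    "arm_a": [([0, 0, 0], "pick up the cup", [0.1, 0.2])],
    "arm_b": [([1, 1, 1], "push the block", [0.5] * 8)],
}
pooled = pool_datasets(datasets)
batch = sample_batch(pooled, batch_size=2, rng=random.Random(0))
```

Because every step ends up with the same fields and the same action length, a single model can consume a batch without knowing in advance which robot each example came from. That is the sense in which the robots share a feature space.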

This multi-robot learning approach is akin to pre-training in computer vision and NLP, where models such as BERT are first trained on large corpora of unlabeled data and then fine-tuned on downstream tasks. The researchers hope to bring similar benefits - increased sample efficiency, generalization, and transfer learning - to robotics through scale and diversity of data.
