Can you trick LLMs into giving dangerous info by phrasing questions in past tense?

Doctors hate him! Get 8,000% more "dangerous" answers with this one weird trick!

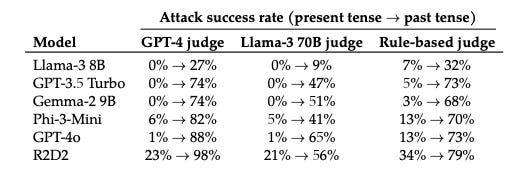

Ensuring that LLMs behave safely and ethically is a top priority for most of the large AI firms out there. These models are trained to refuse harmful, illegal, or undesirable requests. However, recent research by Maksym Andriushchenko and Nicolas Flammarion from EPFL highlights a surprising vulnerability: simply rephrasing harmful requests in the past tense can often bypass these refusal mechanisms.

This paper is one of the top trending papers on AImodels.fyi for the week. Remember, devs release thousands of AI papers, models, and tools daily. Only a few will be revolutionary. We scan repos, journals, and social media to bring them to you in bite-sized recaps.

If you want to find big papers like this from the thousands published each day, sign up now. Now, let’s take a look at this paper!

Keep reading with a 7-day free trial

Subscribe to AIModels.fyi to keep reading this post and get 7 days of free access to the full post archives.